Assignment 6: Egg-eater: Heap Allocation

Due: Sun 11/19 at 11:59pm

git clone

In this assignment you’ll extend to implement arrays and closures, which are sort like eggs lain in the heap, if you don’t think about it too much...

1 Language and Requirements

Egg-eater starts with the same semantics as Diamondback, and adds support for two types of heap-allocated objects: arrays and closures. The language features are:

array expressions: creating values, accessing components, and mutating components

sequencing of expressions with

e1; e2closures: functions can be used as first-class values

lambda: non-recursive function values can constructed using lambda notation

lambda x,...: e end

The runtime system must add support for

Allocating array and closure values on the heap

Printing array and closure values

Read through the whole assignment below carefully, then take note of the recommended TODO list at the bottom for a suggested order to tackle these pieces.

2 Syntax Additions and Semantics

Egg-eater adds two new kinds of heap-allocated "objects": arrays and closures.

For arrays, the new syntactic forms are array expressions,

along with accessor expressions for getting and setting the contents of

arrays, a unary primitive for checking if a value is an array, and a

unary primitive for getting the length of an array. Array expressions

are a series of zero or more comma-separated expressions

enclosed in (square) brackets. An array access expression is one

expression followed by another enclosed in square brakcets, which

expresses which field to be accessed. isarray is a

primitive (like isnum and isbool) that checks

if a value is an array. Finally, length is a primitive

that produces the length of an array.

For closures we change add a new syntactic forms lambda

x1,...: e end for anonymous function values, a unary primitive

isfun for checking if a value is a function and we now

allow for the function position in a call expression to be an

arbitrary value, not just an identifier.

‹expr› ... ‹array› ‹expr› [ ‹expr› ] ‹expr› [ ‹expr› ] := ‹expr› ‹expr› ; ‹expr› isarray ( ‹expr› ) length ( ‹expr› ) ‹expr› ( ) ‹expr› ( ‹exprs› ) lambda : ‹expr› end lambda ‹params› : ‹expr› end ‹params› IDENTIFIER IDENTIFIER , ‹params› ‹exprs› ‹expr› ‹expr› , ‹exprs› ‹array› [ ] [ ‹exprs› ]

For example, we can create three arrays and access their fields:

let unit = [] in

let one = [1] in

let three = [3, 4, 5] in

three[0]An array-set expression evaluates both arguments in left-to-right order, updates the array at the appropriate index, and returns the entire tuple value as its result. We can therefore chain array-set expressions together, and write

let three = [0, 0, 0] in

((three[0] := 1)[1] := 2)[2] := 3

let pair = [0, 0] in

pair[0] := (three[1] := 10)three will be [1,10,3] and pair will be [three, 0]We add three array forms as Prim operations. Array literals

[e1,...] are parsed as MakeArray, indexing

e1[e2] is parsed as ArrayGet and updating

e1[e2] := e3 is parsed as ArraySet. Additionally,

we have an IsArray primitive for checking if a dynamically

typed value is an array. Finally, we also add a Semicolon case to the Exp to represent sequencing without introducing a binding.

enum Prim {

...

IsArray, // Unary

MakeArray, // 0 or more arguments

ArrayGet, // first arg is array, second is index

ArraySet, // first arg is array, second is index, third is new value

}

enum Exp<Ann> {

...

Semicolon {

e1: Box<Exp<Ann>>,

e2: Box<Exp<Ann>>,

ann: Ann,

},

}We add a Semicolon form to SeqExp as well, though

you may choose instead to desugar it into a Let.

For closures, we only need a few small changes to support the new

forms produced by the parser, but we add several internal-only forms

to simplify the implementation.

First, we allow our Call form to have an arbitrary expression

as the function, and add a IsFun primitive as well.

Then we have several internal-only forms: DirectCall and

ClosureCall are used to distinguish between which calls can be

statically resolved (direct) and compiled as in diamondback versus

those that must be implemented by calling a dynamically determined

closure. Next, the ExternalCall form has been updated to allow

the function pointer to be either statically determined (Label)

or dynamically determined (Var). Finally the

CheckArityAndUntag, GetCode and GetEnv forms are

used to simplify the code generation for calling closures, and the

MakeClosure form for creating closures.

enum Prim {

...

IsFun, // Unary

CheckArityAndUntag(usize), // Unary, input is arbitrary value

GetCode, // Unary, input is untagged pointer to a closure

GetEnv, // Unary, input is untagged pointer to a closure

}

enum Exp {

...

// A call that may or may not require a closure

Call(Box<Exp<Ann>>, Vec<Exp<Ann>>, Ann),

// A call to a dynamically determined closure

ClosureCall(Box<Exp<Ann>>, Vec<Exp<Ann>>, Ann),

// A direct call to a statically known function definition

DirectCall(String, Vec<Exp<Ann>>, Ann),

// A local tail call to a statically known local function definition

InternalTailCall(String, Vec<Exp<Ann>>, Ann),

// A function call to either a statically known global function or

// a dynamically determined code pointer

ExternalCall {

fun: VarOrLabel,

args: Vec<Exp<Ann>>,

is_tail: bool,

ann: Ann,

},

MakeClosure {

arity: usize,

label: String,

env: Box<Exp>,

ann: Ann,

},

}

enum VarOrLabel {

Var(String),

Label(String),

}We add similar forms to SeqExp. Note that though we have array

and closure values, MakeArray and MakeClosure are not

immediates as they perform some non-trivial execution steps:

allocating memory. Their values at runtime will always be accessed

through variables.

3 Semantics and Representation of Arrays

3.1 Array Heap Layout

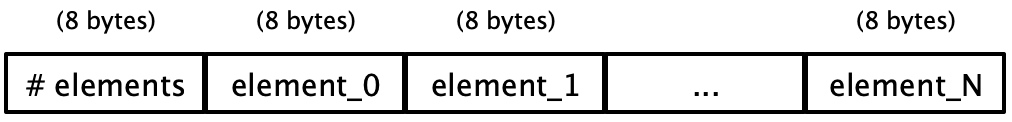

Array expressions should evaluate their sub-expressions in order from left to right, and store the resulting values on the heap. We discussed several possible representations in class for laying out arrays on the heap; the one we recommend you use for this assignment is:

That is, one word is used to store the count of the number of elements in the array, and the subsequent words are used to store the values themselves. Note that the count is an actual integer; it is not an encoded Egg-eater integer value.

An array value is stored in variables as the address of the

first word in the array’s memory, but with an additional 1 added to the value

to act as a tag. So, for example, if the start address of the above memory

were 0x0adadad0, the array value would be 0x0adadad1. With this change, we

extend the set of tag bits to the following:

Numbers:

0in the least significant bitBooleans:

111in the three least significant bitsArrays:

001in the three least significant bitsClosures:

011in the three least significant bits

Visualized differently, the value layout is:

Bit pattern |

| Value type |

|

| Number |

|

| True |

|

| False |

|

| Array |

|

| Closure |

Where W is a “wildcard” 16-bit nibble and b is a “wildcard” bit.

3.2 Accessing Array Contents

In an array access expression, like

let t = [6, 7, 8, 9] in t[1]The behavior should be:

Evaluate the expression in array position (before the brackets), then the index expression (the one inside the brackets).

Check that the array position’s value is actually an array, and signal an error containing

"indexed into non-array"if not.Check that the indexing expression is a number. Signal an error containing

"index not a number"if not.Check that the index number is a valid index for the array value —

that is, it is between 0and the stored number of elements in the array minus one. Signal an error containing"index out of bounds"Evaluate to the array element at the specified index.

These same error messages apply also to setting the value of an

array. Additionally, if at runtime, length is performed on

a non array value, the error message should include "length

called with non-array" analogous to similar previous error messages.

You can do this with just rax, but it causes some

pain. Feel free to use as scratch registers r8 and r9 as

needed (for example saving the index in r9 and using rax

to store the address of the tuple). This can save a number of

instructions. Note that we will generate code that doesn’t need to

use r8 or r9 beyond the extent of this one expression,

so there is no need to worry about saving or restoring the old value

from r9 except in the compilation of the main expression.

You also may want to use an extended syntax for mov in order to combine these

values for lookup. For example, this kind of arithmetic is allowed inside

mov instructions:

mov rax, [rax + r9 * 8 + 8]This would access the memory at the location of rax, offset by

the value of r9 * 8 + 8. So if the value in r9 were,

say 2, this may be part of a scheme for accessing the second

element of a tuple. To aid in this we have generalized the

MemRef type to allow for these dynamically computed offsets:

struct MemRef {

reg: Reg,

offset: Offset,

}

enum Offset {

Constant(i32),

Computed { // reg * factor + constant

reg: Reg,

factor: i32,

constant: i32,

},

}Neither R9 nor anything beyond the typical

Offset::Constant is required to make this work, but you

may find it simpler to compile using these. R8 and R9 are

good for short-lived scratch registers because they are

caller-save in the System V AMD 64 ABI, so you are free to use

them without saving them, but know that their values do not persist

across a call to e.g., print.

3.3 Setting up the runtime

When the Rust stub calls into your generated assembly code, you need

to initialize the heap so your allocation operates correctly. The

register r15 has been designated as the heap pointer. To

initialize the heap you should either use the technique from

Lecture 10: Tuples and Memory Allocation where we construct an array in Rust and pass it in as

an argument to start_here or you can construct an array in the

data section of your assembly file. For instance if you start the file with

section .data

HEAP: times 1024 dq 0Then the label HEAP will be resolved to an address pointing to

an array of 1024 QWORD values initialized to all 0. This is 8

kilobytes which should be enough to pass the autograder tests.

Whichever approach you take, it is up to your code to initialize the

heap pointer R15 to point to the beginning of this space and

ensure that the value of R15 is always the address of the next

block of free space (in increasing address order) in the provided

block of memory.

Additionally, r15 is a callee-save register in the System

V AMD 64 ABI. This is a sensible choice because it means we don’t need

to save it when we call into Rust functions such as print. But

this does mean that we need to save r15 to the stack upon entry

and restore it before returning. This can all be accomplished by using

the following implementation for start_here, assuming the main

expression is compiled with the label main_exp:

start_here:

push r15 ; save the original value in r15

sub rsp, 8 ; padding to ensure the correct alignment

lea r15, [rel HEAP] ; load the address of the HEAP into r15 using rip-relative addressing

call main_exp ; call into the actual code for the main expression of the program

add rsp, 8 ; remove the padding

pop r15 ; restore the original to r15

ret3.4 Interaction with Existing Features

Any time we add a new feature to a language, we need to consider its interactions with all the existing features.

We’ll take them one at a time.

Equality: The arithmetic expressions should continue to only allow numbers, and signal errors on tuple values. There is one binary operator that doesn’t check its types, however:

==. We need to decide what the behavior of==is on tuple values and closure values. Note that we have a (rather important) choice here. Clearly, this program should evaluate totrue:let t = [4, 5] in t == tHowever, we need to decide if

[4,5] == [4,5]should evaluate to

trueorfalse. For this assignment we will consider these arguments to be not equal because they are not implemented as the same value on the heap, so if one was mutated, the other would not be. That is, we will use pointer equality rather than structural equality.We will similarly consider two closures to be equal only when they are the same underlying pointer in memory.

Print: The behavior of the unary operators is straightforward, with the exception that we need to implement

printfor tuples and closures.Closures should really be opaque values that you can only interact with by calling, so we will simply print

"<closure>"when we encounter one.For arrays, we could just print the address, but that would be somewhat unsatisfying. Instead, we should recursively print the tuple contents, so that the program

print([4, [true, 3]])actually prints the string

"[4, [true, 3]]". This will require some additional work with pointers instub.rs. A useful hint is to create a recursive helper function forsprint_snake_valthat traverses the nested structure of tuples and prints single values. To help you working with raw bytes in Rust, we have provided a functionload_snake_arraythat takes a pointer to an array in the heap and "parses" it into a struct consisting of a size and a pointer to the first element of the array. You can then access the other elements of the array by using the.add(n)method on pointers. You will need to useunsafecode to implement this, of course.The interesting case is when there is a cyclic heap value. For instance the following program

let pair = [ 0 , 1 ] in pair[1] := pair; paircreates a cyclic linked list in memory, and naively traversing it would cause an infinite recursion. A small number of test cases will check for correctly implementing printing of cyclic values like this, so you should likely put a bound on your initial print function or the autograder might take very long to run.

In the presence of a cyclic value you should print

"<loop>"where the first loop occurs. For instance the above cyclic list should be printed as"[0, <loop>]".

4 Implementing Closures

4.1 Changes to static and dynamic errors

First, we need to consider how static and dynamic error messages are affected by making functions first-class values.

We no longer need a separate

UndefinedFunctionerror, as functions will be in the same namespace as other values and so we will just use the generalUnboundVariableerror.We no longer need a

FunctionUsedAsValueerror, as functions can be used as values!We will also no longer have a static

ValueUsedAsFunctionerror, but we need instead to have a dynamic error when a number, boolean or array is used as the function in a call. In this case, your code should error with a message that includes the string "called a non-function".Similarly, we will no longer have a static

FunctionCalledWrongAritymessage, as this check will be delayed to runtime. When a function is called with the wrong number of arguments at runtime, your code should raise an error which includes the string "wrong number of arguments".

4.2 Implementing Closures by translation

At a high level, the core features of closures are a combination of heap-allocated objects and function calls. So when implementing closures, we will translate them into internally used forms for constructing and using closure objects as well as our pre-existing forms for function calls that we implemented in diamondback.

We break this down into several steps:

First, optimization pass to eliminate unneccessary closures:

Then update your lambda lifting pass to lift function definitions for closures and generate IR code to construct mutually recursive closures

Finally, update the code generation for external calls to handle dynamically determined calls as well as the new primitive forms.

First, you should implement an optimization pass

eliminate_closures<Ann> : &Exp<Ann> -> Exp<()> that translates

Calls to either DirectCall for those where the function

being called can be resolved to a statically known label and

ClosureCall for where the function being called is a

dynamically determined closure. If this pass is implemented correctly,

valid diamondback programs should be compiled with minimal performance

hit. As an example, in the following program there are two function

calls:

def f(x,y): ... in

let arr = [f] in

let r = f(0,1) in

let g = arr[0] in

g(r, 2)The first call f(0,1) is easily determined to be a call to

a known function definition and so should be translated to a

DirectCall. The second call, g(r, 2) is to a

variable g that does not correspond to a known function

definition and so should be translated to a

ClosureCall1notice that if we did some further

simplifications to this program, we would be able to figure out that

g will be f. This shows how different

optimizations feed into each other..

Next, you’ll need to update your lambda lifting procedure to handle

lambda functions and mutually recursive

closures. First, we need to lift not just functions that get non-tail

calls but also any function definition that ends up being used as a

closure. For the details of the updpated translation, refer to the

lecture and notes on closures. You should use generate intermediate

code that uses the MakeClosure, CheckArityAndUntag,

GetCode and GetEnv internal forms that we have

provided. Additionally, the function in an ExternalCall can now

be either a dynamically determined variable or a statically resolved

function name that will correspond to a label in code generation.

Finally update your code generation to implement these new forms as

well as the dynamically determined ExternalCalls. To support

dynamically determined calls we have updated all jmp and

call forms to take a register as an argument. Additionally, we

have added a special Instr in asm.rs called

RelativeLoadAddress(r, l) which is the instruction lea r,

[rel l] which uses RIP-relative addressing to load a label into a

register, which is the easiest way to load the address corresponding

to a label into a register that works on Mac OS X. This instruction will be

useful for implementing MakeClosure.

5 Recommended TODO List

Try implementing an interesting test cases using lists, binary trees or another interesting recursive datatypes in Egg-eater. Include one of these examples as

interesting.eggin theexamples/directory.Add your existing code, adding

panic!for the new cases (arrays, lambda) and changed cases (function calls).Write tests for the array features.

Extend

check_progto handle arrays, and adapt it to correctly handle the changed error messages relating to functions.Extend your passes (

uniquify, lambda_lift,sequentialize) to support arrays.Implement code generation for arrays.

Implement printing arrays in Rust.

Write tests for closures/lambdas.

Extend

lambda_liftto translatelambdas to uses ofmake_closure.Implement code generation for

make_closureandcall_closure.Extend your implementation to handle recursive closures. We discussed several methods for this in class (directly, Landin’s knot and the Y combinator) and you are free to use whichever you wish.

6 List of Deliverables

your test input programs (

examples/*.eggfiles), specifically at least oneexamples/interesting.eggyour

compile.rsthe other src/*.rs files in the starter code

any additional modules you saw fit to write

your

runtime/stub.rsthe Cargo.toml

integration tests (

tests/examples.rs)

Again, please ensure cargo builds your code properly.

The autograder will give you an automatic 0 if it cannot compile your code!

7 Grading Standards

For this assignment, you will be graded on

Whether your code implements the specification (functional correctness),

Whether you include an interesting example file

interesting.egg. We’ll have a low bar for interestingness but try to have fun with it!

Note that the autograder has hidden tests worth 10% of the total autograder score so be sure to test your code thoroughly.

8 Submission

Wait! Please read the assignment again and verify that you have not forgotten anything!

Please submit your homework to gradescope by the above deadline.

1notice that if we did some further

simplifications to this program, we would be able to figure out that

g will be f. This shows how different

optimizations feed into each other.